INtime uses physical memory to load and run the INtime kernel and its associated applications. The INtime kernel provides allocation services to processes allowing them to acquire physical memory. It also provides virtual memory services to allow processes to access the physical memory allocated to them.

When the INtime kernel starts, it receives a pool of physical memory to manage. In INtime for Windows this memory is allocated from the Windows non-paged pool at boot time, or in some cases it may be pre-allocated by the Windows boot loader. The memory allocated to the INtime kernel is used to load the kernel, and the remainder is managed as the memory pool of the root process. All system and application processes created after INtime kernel initialization receive an initial allotment of memory from the root process, which becomes the initial memory pool of the new process.

Traditionally the INtime kernel has provided a 32-bit physical memory space. That means that all physical memory addresses were 32 bits in length, providing a total accessible physical memory range of 4 GB. Beginning in INtime version 6.2 the Extended Physical Memory feature reorganizes the physical memory manager to allow access to all of the RAM installed in a PC. This is made possible by the PAE ("Physical Address Extension") feature of the paging system of the CPU. One or more memory areas may be allocated to each node by configuration.

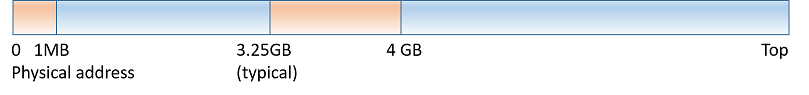

This diagram illustrates the memory map of a typical PC system. Certain address ranges (colored orange) are reserved for I/O and firmware, with RAM accessible in the other ranges.

The PC architecture remaps the RAM that would overlap the reserved ranges to addresses above the top of the installed memory range, so that this RAM can still be used rather than being lost to the reserved ranges. As a result, the highest physical RAM address is a little higher than the total amount of memory installed. For example, on a typical PC with 8 GB of RAM installed, the "Top", or the highest physical RAM address, is typically at about 8.5 GB. This matters when memory allocation sizes are calculated, and because memory has different uses above and below the 4 GB boundary.
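As a rough illustration of the 8 GB example: if all of the RAM hidden behind the reserved ranges is remapped, Top is approximately the installed RAM plus the size of those ranges. The sketch below assumes a reserved size of 512 MB, a hypothetical figure that depends on the chipset and firmware; the helper names are also hypothetical.

```c
#include <stdint.h>

#define MB (1024ULL * 1024ULL)
#define GB (1024ULL * MB)

/* Hypothetical helper: estimated highest physical RAM address ("Top") when
 * the RAM hidden behind reserved ranges below 4 GB is remapped above the
 * top of installed memory. */
uint64_t estimate_ram_top(uint64_t installed, uint64_t reserved_below_4gb)
{
    return installed + reserved_below_4gb;
}

/* Amount of address space between the 4 GB boundary and Top, assuming
 * all of it is backed by RAM. */
uint64_t ram_above_4gb(uint64_t top)
{
    return top > 4 * GB ? top - 4 * GB : 0;
}
```

With these assumptions, `estimate_ram_top(8 * GB, 512 * MB)` gives 8.5 GB, of which 4.5 GB lies above the 4 GB boundary.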

Without the Extended Physical Memory feature, only 32-bit physical addresses can be generated, limiting physical memory to below 4 GB. With the Extended Physical Memory feature, an INtime application is still a 32-bit application and addresses only a 32-bit linear range of addresses. However, the paging hardware of the CPU can generate physical addresses across the maximum physical address range of the CPU. This means that the memory in which the application runs does not have to be physically located below 4 GB. Granting access to the memory above 4 GB allows for greater use of memory in a given application. In an XM process (see definition below) it is possible to map up to 3.75 GB of RAM at one time, and each process can access its own separate 3.75 GB.

Most existing applications do not have to change to use Extended Physical Memory because they do not have to know about where their physical memory is actually located. However, there are some exceptions.

Even though the 32-bit physical memory address limitation is removed, some applications still require memory from below 4 GB: for example, applications using hardware that can itself generate only 32-bit addresses for DMA. Some memory below 4 GB is then required to provide buffers that the hardware can access. This is typical of older 10/100 Mbit Ethernet interfaces and most USB controllers, for example. When programming hardware devices, new system calls are required to handle physical addresses above 4 GB; see MapRtPhysicalMemory64 and GetRtPhysicalAddress64, for example.
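A driver for 32-bit DMA hardware must confirm that every byte of a buffer lies below 4 GB, not just its start address. A minimal sketch of that check, assuming the buffer is physically contiguous and its 64-bit physical address has already been obtained (for example with GetRtPhysicalAddress64, whose exact signature is not shown here); `dma32_reachable` is a hypothetical helper, not an INtime call:

```c
#include <stdbool.h>
#include <stdint.h>

#define FOUR_GB 0x100000000ULL

/* Hypothetical helper: true if the physically contiguous buffer
 * [phys, phys + size) ends at or below the 4 GB boundary, so a device that
 * can generate only 32-bit DMA addresses can reach every byte of it.
 * Written to avoid overflow in phys + size. */
bool dma32_reachable(uint64_t phys, uint64_t size)
{
    return size <= FOUR_GB && phys <= FOUR_GB - size;
}
```

A one-page buffer at 0xFFFFF000 passes, but a two-page buffer at the same address fails because its second page lies above 4 GB.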

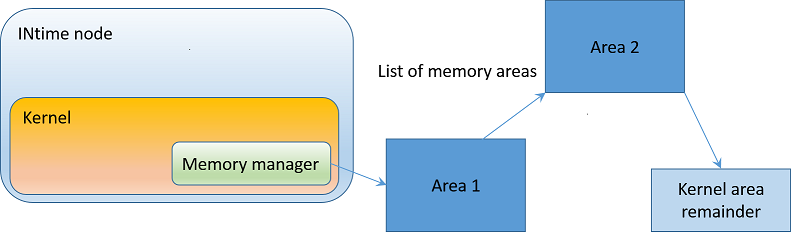

With the Extended Physical Memory feature, memory is allocated to each node in a number of physical areas. These areas are allocated as contiguous non-paged memory during the Windows boot phase and assigned to each node according to the system configuration. The first area (the "kernel area") is fixed and is used for loading the kernel and for allocating any fixed system memory resources. The second and subsequent areas ("application areas") are managed by the kernel's memory manager for use by applications. Each application area has a configurable size and may be allocated from memory below or above the 4 GB boundary, or allocation may be left up to Windows. Any memory left over from the kernel area is managed by the kernel and added to the area list after the application areas.

The default allocation for a new node is a single 32 MB area from memory below 4 GB. For compatibility, importing a memory configuration from a version of INtime prior to 6.2 into a current version creates a kernel area, followed by a second area equal in size to the previously configured kernel area.

When allocating memory in general, the areas are searched in the order they are assigned to the memory manager. In addition, an application can choose to guide the memory manager as to where the allocated memory comes from. An extended system call, AllocateRtMemoryEx, has parameters to set the floor and ceiling for the range of physical addresses from where the memory is to be allocated. For example, to ensure that memory is allocated from below 4 GB, set an upper limit of 0x100000000L in the call:

```c
DWORD memsize = (3 * 1024 * 1024);                /* 3 MB */

/* floor = 0, ceiling = 0x100000000 (4 GB) */
LPVOID p = AllocateRtMemoryEx(memsize, 0L, 0x100000000L, 0);
```

This ensures that the memory is allocated from between 0 and 4 GB.
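The search order and the floor/ceiling limits can be modeled in plain C. This is a simplified, hypothetical sketch (`area_t` and `area_alloc` are not INtime types or calls): each area is treated as a bump allocator, the areas are tried in the order they were assigned, and an area is skipped if its next free block does not lie entirely within [floor, ceiling). The real memory manager is not limited to this scheme.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, simplified model of one physical memory area. */
typedef struct {
    uint64_t base;   /* physical start address */
    uint64_t size;   /* bytes in the area */
    uint64_t used;   /* bytes already handed out from the start */
} area_t;

/* Try the areas in assignment order; take `size` bytes from the first one
 * whose next free block lies within [floor, ceiling). */
bool area_alloc(area_t *areas, size_t count, uint64_t size,
                uint64_t floor, uint64_t ceiling, uint64_t *phys_out)
{
    for (size_t i = 0; i < count; i++) {
        area_t *a = &areas[i];
        uint64_t start = a->base + a->used;

        if (size > a->size - a->used)
            continue;                    /* not enough room in this area */
        if (start < floor || start + size > ceiling)
            continue;                    /* outside the requested range */

        a->used += size;
        *phys_out = start;
        return true;
    }
    return false;                        /* no area can satisfy the request */
}
```

With an area above 4 GB assigned first and one below 4 GB assigned second, a request with a 4 GB ceiling skips the first area and is satisfied from the second, while an unconstrained request takes the first area in the list.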

The reasons for configuring multiple areas for your node include the following:

In the second case, if a relatively large amount of memory is required for the application and it is allocated from the Windows non-paged pool, Windows may not be able to allocate all of the memory required. If the system has a large amount of RAM installed, memory can be taken from above 4 GB instead; alternatively, the allocation can be split into smaller pieces, for example four 512 MB areas instead of a single 2 GB area. This configuration is more likely to succeed. The only operational difference between these two configurations is that the maximum single allocation is reduced to 512 MB.

Windows has a limit of 4 GB for a single mapping, which restricts the size of a single area to 4 GB if allocated from the non-paged pool. If a larger area is required, use the "Exclude memory from Windows" attribute for the area.
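The arithmetic behind these choices is simple. A hypothetical planning sketch (neither function is part of INtime): the number of equal areas needed for a given total, and whether an area of a given size is permitted from the non-paged pool under the 4 GB single-mapping limit.

```c
#include <stdbool.h>
#include <stdint.h>

#define MB (1024ULL * 1024ULL)
#define GB (1024ULL * MB)

/* Number of equal areas of `area_size` bytes needed to provide `total`
 * bytes, rounded up. The largest single allocation is then at most
 * `area_size`. */
uint64_t areas_needed(uint64_t total, uint64_t area_size)
{
    return (total + area_size - 1) / area_size;
}

/* An area taken from the Windows non-paged pool is limited to a single
 * 4 GB mapping; larger areas must come from excluded memory. */
bool area_size_ok(uint64_t area_size, bool from_nonpaged_pool)
{
    return !from_nonpaged_pool || area_size <= 4 * GB;
}
```

Splitting 2 GB into 512 MB areas gives `areas_needed(2 * GB, 512 * MB)` equal to 4; a 6 GB area passes `area_size_ok` only when it comes from excluded memory.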

Another configuration option creates a memory area that is not assigned to the kernel for management, so that an application can manage it directly. This is useful, for example, when a very large area of memory is required for data buffering. The application maps this memory directly and manages it itself. Discover the area through the GetRtSystemMemoryInfo system call: an unmanaged area has a flags value indicating its unmanaged status, together with the physical address and size of the area.

The Windows boot-loader may be configured so that not all of the system RAM is managed by Windows. This is done by excluding memory starting from the top of the RAM array. INtime can be configured to use this excluded memory. Use excluded memory if difficulty is experienced in allocating enough memory from the Windows non-paged pool, or if very large areas (greater than 4 GB in size) are required.

Prior to the introduction of the Extended Physical Memory feature, the memory ceiling was always configured so that the memory excluded for INtime's use would fit below 4 GB, because INtime could address at most 4 GB. The choice was also all-or-nothing: either all of INtime's memory was allocated from the Windows non-paged pool, or all of it was excluded from Windows. With the new feature enabled on 64-bit Windows, the ceiling is set above 4 GB and the choice between excluded memory and non-paged pool memory is made on a per-area basis, allowing for greater flexibility and utility.

In the INtime Distributed RTOS deployment model, initially the boot node owns all of the memory on the platform. When additional nodes are created, physical memory is allocated from the boot node's pool. One or two allocations are made for each application node.

Base memory is physical memory from below 4 GB. Each node needs at least 32 MB of base memory to load the kernel and essential services. 64 MB is the default allocation.

Extended memory is physical memory from above 4 GB. Its use is optional, but it is recommended when relatively large amounts of memory are required.

An INtime process cannot access physical memory directly. Instead, each process owns a memory space, which is a range of memory addresses starting at zero and expanding to some limit. These memory addresses are called Virtual Memory, and the range of addresses available to the process is organized in a system object called the Virtual Memory Segment, or VSEG. The VSEG is initially empty, and physical memory is mapped into the VSEG either by the loader when the process is created, or by allocation routines. Mapping physical memory allocates a range of addresses from the VSEG and makes the physical memory visible through that range of addresses. The virtual address returned to the caller is used to access the physical memory. If a process attempts to map or allocate memory and there is not enough space in the VSEG to complete the action, the system returns status E_VMEM.

Not every address in the VSEG has valid physical memory mapped to it, and an attempt to access an address that is not valid generates a Page Fault exception. The range of addresses from 0 through 4095 is always reserved so that, for example, any attempt to dereference a NULL pointer always generates a Page Fault exception.
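A minimal model of this behavior, assuming a simple bump allocator: address space is handed out in whole pages starting above the reserved first page, and a request that does not fit returns an error standing in for E_VMEM. The type, functions, and status values are hypothetical; the real allocator also applies the page-table placement rules described later in this section.

```c
#include <stdint.h>

#define PAGE_SIZE     4096ULL
#define E_OK_SKETCH   0   /* hypothetical status values for this sketch */
#define E_VMEM_SKETCH 1   /* stands in for INtime's E_VMEM status */

/* Hypothetical VSEG model: virtual addresses [0, limit). */
typedef struct {
    uint64_t limit;  /* VSEG size in bytes; must be at least one page */
    uint64_t next;   /* next free virtual address */
} vseg_t;

void vseg_init(vseg_t *v, uint64_t limit)
{
    v->limit = limit;
    v->next = PAGE_SIZE;  /* page 0 (0..4095) stays unmapped: NULL faults */
}

/* Reserve `size` bytes of address space, rounded up to whole pages. */
int vseg_map(vseg_t *v, uint64_t size, uint64_t *addr_out)
{
    uint64_t bytes = (size + PAGE_SIZE - 1) / PAGE_SIZE * PAGE_SIZE;

    if (bytes > v->limit - v->next)
        return E_VMEM_SKETCH;  /* not enough space left in the VSEG */
    *addr_out = v->next;
    v->next += bytes;
    return E_OK_SKETCH;
}
```

In an 8 MB VSEG, the first mapping starts at address 4096, never at 0, and a request for the full 8 MB fails because the reserved page is not available.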

The VSEG size for a process is determined by a number of factors, with the default set to 8 MB, the minimum allowed. The VSEG needs to be large enough to cover the areas where code and data are to be loaded in the application. In addition, if the Pool Maximum value of a process is set to a value other than the default, the loader increases the size of the VSEG to match, on the assumption that all of the physical memory allocated to a process may need to be accessible at once. The default VSEG size for a given process can also be configured by the linker, and it can be overridden by the loader using the /vseg command-line parameter. It is also possible to override it in the CreateRtProcess (and the equivalent ntxCreateRtProcess) system call with the VsegSize field of the PROCESSATTRIBUTES structure passed to the call.
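The sizing rules can be summarized as "at least the minimum, and at least the Pool Maximum when one is set". A hypothetical sketch of that reading: `effective_vseg_size` is not an INtime call, and treating the linker, /vseg, and VsegSize values as a single "requested" size is an assumption, since the exact precedence between them and the Pool Maximum adjustment is not spelled out here.

```c
#include <stdint.h>

#define MB (1024ULL * 1024ULL)
#define VSEG_MIN (8 * MB)   /* default and minimum VSEG size */

/* requested: size from the linker, /vseg, or PROCESSATTRIBUTES.VsegSize
 *            (0 = not specified).
 * pool_max:  Pool Maximum, or 0 if left at the default. */
uint64_t effective_vseg_size(uint64_t requested, uint64_t pool_max)
{
    uint64_t size = requested > VSEG_MIN ? requested : VSEG_MIN;

    if (pool_max != 0 && pool_max > size)
        size = pool_max;    /* make the whole pool mappable at once */
    return size;
}
```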

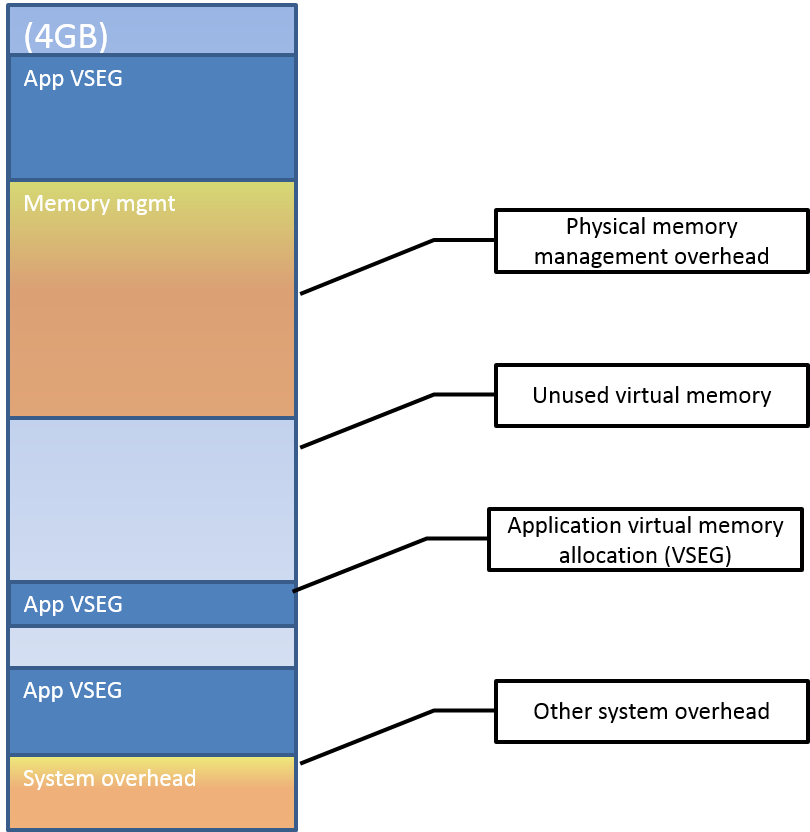

In the original design of the INtime system (before version 6), the kernel manages a total of 4 GB of virtual memory for the entire kernel and all of the applications loaded on it. Thus the total size of all of the VSEGs cannot exceed 4 GB. In addition, the kernel uses some virtual memory space for managing the physical memory pool, which reduces the total virtual memory available to applications. A new feature, called XM processes (see below), was introduced in INtime version 6 to overcome these limitations. The maximum VSEG size for a non-XM process is fixed at 3.75 GB.
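The constraint amounts to a budget check over the shared 4 GB space. A hypothetical sketch: `vsegs_fit` is not an INtime call, and the kernel overhead is passed in as an assumed figure because its actual size depends on the configuration.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define MB (1024ULL * 1024ULL)
#define GB (1024ULL * MB)
#define MAX_VSEG (3 * GB + 768 * MB)   /* 3.75 GB limit for a non-XM VSEG */

/* Can these non-XM VSEGs coexist in the single 4 GB system virtual space,
 * given `overhead` bytes used by the kernel? */
bool vsegs_fit(const uint64_t *sizes, size_t n, uint64_t overhead)
{
    uint64_t total = overhead;

    for (size_t i = 0; i < n; i++) {
        if (sizes[i] > MAX_VSEG)
            return false;              /* single VSEG too large */
        total += sizes[i];
        if (total > 4 * GB)
            return false;              /* combined VSEGs exceed 4 GB */
    }
    return true;
}
```

Three 1 GB VSEGs fit with an assumed 512 MB of overhead, but two 2 GB VSEGs do not fit once any overhead is counted.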

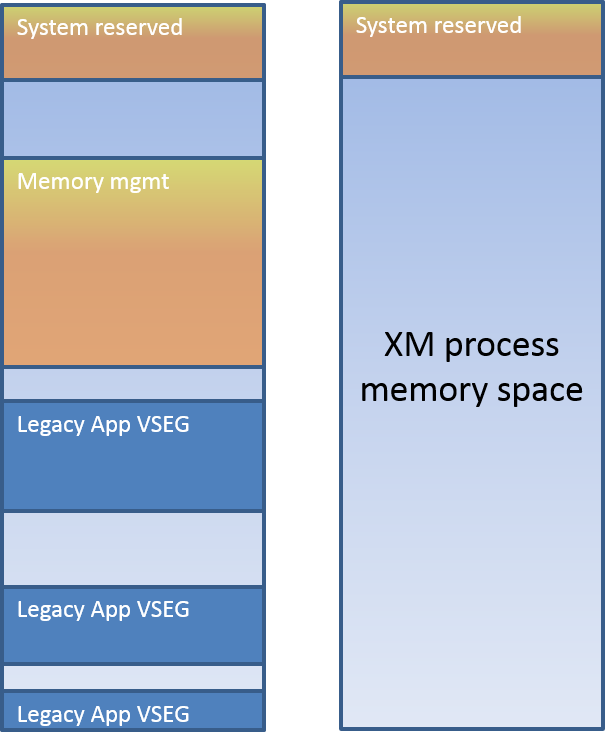

This figure shows how the 4 GB of virtual memory space is divided between the various system overheads and the VSEGs created for applications.

Virtual memory is organized in units of a page (4096 bytes). A higher-order organizational unit is the page table, which covers 2 MB of address space in PAE mode and 4 MB in non-PAE mode. The allocator tries to fit each virtual memory allocation into a page table area; if an allocation does not fit in the remaining space of the current page table, it is placed at the start of an empty page table. An allocation that does not start at the beginning of a page table gets an additional page of virtual memory in front of it as the "guard page", an empty page between allocations.

These rules give rise to non-optimal allocations in certain circumstances. For example, in a PAE-enabled (Extended Physical Memory) configuration, a series of 1 MB allocations "wastes" about half of the available virtual memory space. The first allocation starts at the beginning of a 2 MB page table area, but the second does not fit in the remaining 1 MB because of the additional guard page, so it is placed at the beginning of the following page table. In contrast, a 2 MB allocation fits exactly in a page table because the first allocation in a page table does not get a guard page; a series of 2 MB allocations therefore gives the best fit in the virtual memory allocation scheme.
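The placement rule can be checked by simulation. A minimal sketch, assuming allocations are placed one after another starting from a page-table boundary at address 0 and that space is never freed; `place` and `span_for` are hypothetical helpers, not INtime calls.

```c
#include <stdint.h>

#define PAGE_SIZE 4096ULL
#define MB (1024ULL * 1024ULL)

/* `next` is the first free virtual address; `table` is the span covered by
 * one page table (2 MB with PAE, 4 MB without). Returns the address chosen
 * for an allocation of `size` bytes and advances `*next`. */
uint64_t place(uint64_t *next, uint64_t size, uint64_t table)
{
    uint64_t bytes = (size + PAGE_SIZE - 1) / PAGE_SIZE * PAGE_SIZE;
    uint64_t offset = *next % table;
    uint64_t addr;

    if (offset == 0)
        addr = *next;                     /* table start: no guard page */
    else if (PAGE_SIZE + bytes <= table - offset)
        addr = *next + PAGE_SIZE;         /* fits after a guard page */
    else
        addr = *next + (table - offset);  /* move to the next empty table */

    *next = addr + bytes;
    return addr;
}

/* Virtual address span consumed by `count` equal allocations. */
uint64_t span_for(unsigned count, uint64_t size, uint64_t table)
{
    uint64_t next = 0;

    for (unsigned i = 0; i < count; i++)
        place(&next, size, table);
    return next;
}
```

With 2 MB page tables, four 1 MB allocations occupy 7 MB of address space, while four 2 MB allocations occupy exactly 8 MB with no gaps; with 4 MB page tables (non-PAE), four 1 MB allocations occupy 5 MB.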

In many systems, an add-in card contains memory that RT threads in the INtime environment need to access. Use MapRtPhysicalMemory (or MapRtPhysicalMemoryEx/MapRtPhysicalMemory64) to make this contiguous, page-aligned, page-granularity memory accessible. If other processes in the INtime environment need access to this memory, the address may be converted to a handle via CreateRtMemoryHandle and mapped as with other shared memory handles, as explained below, or else the second process can also call MapRtPhysicalMemory. Once the mapping is no longer needed, use FreeRtMemory to delete the mapping and to free the associated virtual memory.

By default, memory present on such I/O cards is not cached by the CPU memory controller, but further control over the caching attributes of mapped memory is available through the extra flags of the MapRtPhysicalMemoryEx/MapRtPhysicalMemory64 system calls.

You can find usage information about a process' VSEG by calling GetRtVirtualMemoryInfo.

An XM ("eXtended Memory") process is a new class of process introduced in INtime version 6. An XM process has its own separate 4 GB of virtual memory space and does not use a VSEG allocated from the system virtual memory space. The top 256 MB of the virtual memory range for an XM process is reserved for use by the system but the remaining 3.75 GB may be used by the process.

XM processes are not available when the INtime for Windows kernel is configured to be loaded in Shared mode. On a shared mode system all processes are loaded in non-XM mode by default.

An XM process has two main advantages: there is no practical limit on the virtual memory space available to the process for mapping memory, and there is also a performance benefit on processors of the Intel ATOM family.

Using an XM process has a couple of minor drawbacks that should be taken into consideration. Because an XM process has its own separate virtual address space, some system calls require a switch between the process's address space and the system's. The same is true for thread switches between threads in an XM process and threads in another process, including processes in the default memory space. There is also a small additional overhead in handling an interrupt while an XM process is running, again because of the switch between address spaces. The overhead in each case is small but may be significant in a heavily loaded system.

An XM process is created by the loader when the /XM switch is used, or if the XM flag has been set in the INtime settings in an INtime project. Applications which were created in versions of INtime prior to version 6 will be loaded as non-XM processes by default.

This figure shows the original system memory space, still used by the kernel and by legacy applications, and a new memory space created for an XM process. Each XM process has its own 4 GB virtual memory space. The first memory space is known as the Default memory space, or System memory space.

From INtime 6.2 onwards, the area used for "Memory mgmt" is no longer required.

Each INtime process has a physical memory pool, and two parameters associated with the pool.

The Pool Minimum value is the amount of memory initially allocated to the process at process creation time. When the process is loaded the loader ensures that the pool minimum is sufficient to load and start the application. The default setting is to allow the loader to calculate it.

Each process also has a Pool Maximum value assigned to it. This is the maximum amount of physical memory the process can allocate to itself. When a process allocates memory, this memory first comes from the pool minimum already allocated to the process. If all of that memory is exhausted, the process borrows memory from its parent process until it reaches the Pool Maximum value. After that, an attempt to allocate more physical memory results in an E_MEM status. The default value for this parameter allows the process to borrow as much memory as possible from its parent, with no limit.

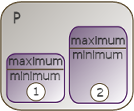

This figure shows two processes whose memory pools have been allocated from the memory pool of the root process.

P is the pool of the root process. Its size is 8192 K.

Since the total minimum size for the processes is 500 K, both processes can be created. Since the total maximum size is 5000 K, the pools can reach their maximum sizes simultaneously.

When threads use INtime kernel system calls to create objects, the memory associated with these objects comes out of the memory pool of the calling thread's process. Likewise, threads satisfy their dynamic memory needs by using INtime system calls to allocate and reallocate memory into and out of their own virtual address space (see Virtual Memory section, above).

The Pool Minimum and Pool Maximum values may be set in the INtime settings of the application's Visual Studio project. The values may also be overridden at load time: either use the /pmin and /pmax parameters of the ldrta.exe command, or change the fields of the PROCESSATTRIBUTES structure passed to the CreateRtProcess call (or its equivalent, ntxCreateRtProcess).

An application can read basic statistics of its pool using the GetRtPhysicalMemoryInfo system call, which returns information about pool min, pool max, the size of the process pool, how much memory is allocated to the process and how much memory is yet available.

Memory in a process pool is unallocated and unusable until it is requested by threads in the process. A request for memory is explicit when you call AllocateRtMemory and implicit when you create a system object. Other memory management interfaces such as malloc in C or new in C++ ultimately call AllocateRtMemory to allocate physical memory.

System objects have various sizes, depending on the type, and are paragraph (16-byte) aligned. Allocation of memory using AllocateRtMemory is always in multiples of a system page (4096 bytes) and is always aligned on a page boundary.
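To estimate how much of a pool a request consumes, round its size up to the allocation unit. A minimal sketch, assuming page rounding for AllocateRtMemory and paragraph rounding as a rough stand-in for system objects (real objects also have type-dependent sizes and any bookkeeping overhead, neither of which is modeled here); `round_up` is a hypothetical helper.

```c
#include <stdint.h>

#define PAGE_SIZE 4096u   /* AllocateRtMemory granularity */
#define PARAGRAPH 16u     /* system object alignment */

/* Round `n` up to a multiple of `unit` (unit must be a power of two). */
uint32_t round_up(uint32_t n, uint32_t unit)
{
    return (n + unit - 1) & ~(unit - 1);
}
```

For example, a 5000-byte request to AllocateRtMemory consumes two pages (8192 bytes) of the pool, and a 100-byte object occupies 112 bytes once aligned to 16 bytes.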

An extended call, AllocateRtMemoryEx, allows restrictions to be placed on the physical memory allocated, such as upper and lower boundaries, and physical address alignment.

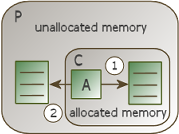

When a thread tries to create a system object or allocate memory and the unallocated part of its process's pool is too small to satisfy the request, the INtime kernel tries to borrow more memory, up to the pool's specified maximum, from the process's parent process. The figure illustrates borrowing memory.

When process C is deleted, the memory in its pool becomes unallocated, and it returns to the pool of the parent process.

Borrowing increases the pool size of the borrowing process and is restricted to the process's maximum. If a process has equal pool minimum and maximum attributes, its pool is fixed at that common value, and the process cannot borrow memory from the parent process.
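The borrowing rules can be summarized in a small model. This is a hypothetical sketch, not the kernel's implementation: sizes are in KB, `pool_t` and its functions are invented for illustration, and a false return stands in for the E_MEM status. The per-process values used to exercise it (200 K/300 K minima, 2000 K/3000 K maxima) are assumptions chosen to match the 500 K and 5000 K totals in the root-pool example above.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of a process pool (sizes in KB). */
typedef struct pool {
    struct pool *parent;  /* NULL for the root process */
    uint64_t size;        /* current pool size (starts at Pool Minimum) */
    uint64_t used;        /* memory allocated from the pool */
    uint64_t max;         /* Pool Maximum */
} pool_t;

/* Allocate `amount`, borrowing the shortfall from the parent if needed. */
bool pool_alloc(pool_t *p, uint64_t amount)
{
    uint64_t free_kb = p->size - p->used;

    if (amount > free_kb) {
        uint64_t shortfall = amount - free_kb;
        if (p->size + shortfall > p->max)
            return false;             /* would exceed Pool Maximum */
        if (p->parent == NULL || !pool_alloc(p->parent, shortfall))
            return false;             /* parent cannot lend enough */
        p->size += shortfall;         /* borrowed memory grows the pool */
    }
    p->used += amount;
    return true;
}

/* Create a child whose initial pool (Pool Minimum) comes from the parent. */
bool pool_create(pool_t *child, pool_t *parent, uint64_t min, uint64_t max)
{
    if (!pool_alloc(parent, min))
        return false;
    child->parent = parent;
    child->size = min;
    child->used = 0;
    child->max = max;
    return true;
}

/* Deleting a process returns its whole pool to the parent. */
void pool_delete(pool_t *child)
{
    child->parent->used -= child->size;
    child->size = 0;
    child->used = 0;
}
```

With a root pool of 8192 K, both children can be created and can reach their maxima together, because 500 K and 5000 K both fit in 8192 K. A child whose Pool Minimum equals its Pool Maximum can never borrow.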

Call GetRtPhysicalMemoryInfo to find out how much memory has been allocated to a process and how much has been borrowed. This supersedes an older call, GetRtProcessPoolInfo, which is limited to 32-bit physical memory fields and may not be able to return the information, in particular for the root process.

A thread can share contiguous portions of its memory with threads in another process by creating a shared RT memory handle for this memory. The handle can then be passed to a thread in the other process through a mailbox (for example), or by cataloging it in an object directory. Threads within the same process already share the same address space, and so can expose memory allocation pointers to each other via global variables.

Create a shared RT memory handle using CreateRtMemoryHandle. When external processes no longer need access to the shared memory defined by the RT handle, use DeleteRtMemoryHandle to delete the RT handle and thus limit the allocated memory to local use only.

See also Memory Synchronization Intrinsics.

These system calls relate directly to memory management:

| To . . . | Use this system call . . . |
|---|---|
| Allocate physical memory into the thread's virtual segment | AllocateRtMemory, AllocateRtMemoryEx |
| Allocate memory from the RT kernel | ntxAllocateRtMemory |
| Copy data directly between RT memory objects | CopyRtData |
| Copy data directly between NTX process space and an RT process memory region; use this function when you want to omit the mapping step | ntxCopyRtData |
| Create handles for sharing memory between processes | CreateRtMemoryHandle |
| Delete handles used for sharing memory between processes | DeleteRtMemoryHandle |
| Move allocated memory out of the thread's virtual segment | FreeRtMemory |
| Free RT memory | ntxFreeRtMemory |
| Return the virtual address of a memory object with an offset | GetRtLinearAddress |
| Return a physical address for a valid buffer described by call parameters | GetRtPhysicalAddress, GetRtPhysicalAddress64 |
| Obtain information about the physical memory used and available to a process | GetRtPhysicalMemoryInfo |
| Obtain the value of the data segment handle for the process, for use in CopyRtData | GetRtProcessDataSegment |
| Determine the size of a memory area given its handle | GetRtSize, ntxGetRtSize |
| Obtain information about the system memory configuration for the local node | GetRtSystemMemoryInfo |
| Obtain information about the virtual memory used and available to a process | GetRtVirtualMemoryInfo |
| Map a region of physical memory into the thread's virtual segment | MapRtPhysicalMemory, MapRtPhysicalMemoryEx, MapRtPhysicalMemory64 |
| Map a memory segment into the thread's virtual segment | MapRtSharedMemory, ntxMapRtSharedMemory, ntxMapRtSharedMemoryEx |
| Remap a pointer to a new physical memory address | RemapRtPhysicalMemory, RemapRtPhysicalMemory64 |
| Synchronize shared-memory access between nodes without using system calls | Synchronization intrinsic functions |
| Return system resources consumed by ntxMapRtPhysicalMemoryList | ntxUnmapRtPhysicalMemoryList |
| Return system resources consumed by ntxMapRtSharedMemory | ntxUnmapRtSharedMemory |
| Verify that a buffer pointer is a valid pointer | ValidateRtBuffer |

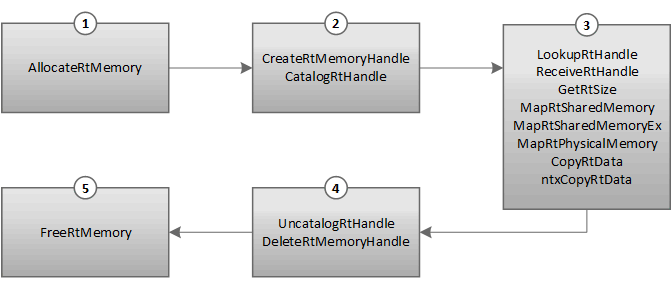

This figure shows the order in which to make memory management system calls, and lists the calls that memory operations frequently use.